
Nov 13, 2024


See the final results from our Threshold 2030 Conference here:

Threshold 2030: Conference Report

These are some overall takeaways from our Threshold 2030 conference. We'll discuss the purpose of the conference, our methodology, and attendee feedback and reflections. This post is not the final report summarizing concrete results; that report is published at the link above.

Conference Overview

In October 2024, we organized a 30-person expert conference to rapidly evaluate the economic impacts of frontier AI technologies by 2030. This event was hosted jointly by Convergence Analysis and Metaculus, with financial support from the Future of Life Institute.

The conference relied heavily on scenario modeling to produce concrete, detailed outputs about potential AI futures. We asked our attendees to consider a set of three plausible scenarios regarding the trajectory of AI development. Based on these scenarios, we had attendees conduct:

Worldbuilding: Attendees created and discussed detailed, realistic descriptions of global societies and economies in 2030, focusing on their domains of expertise.

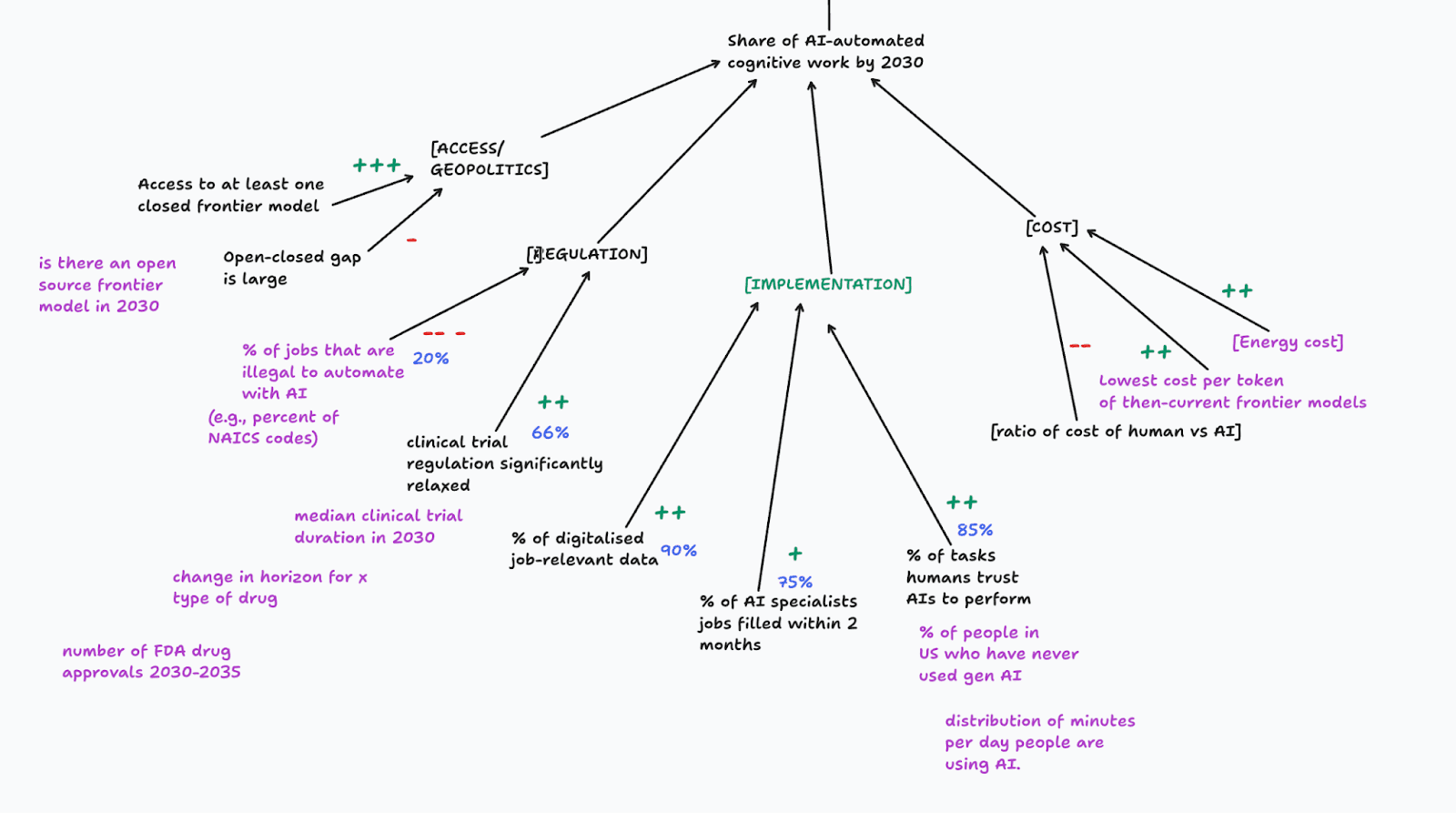

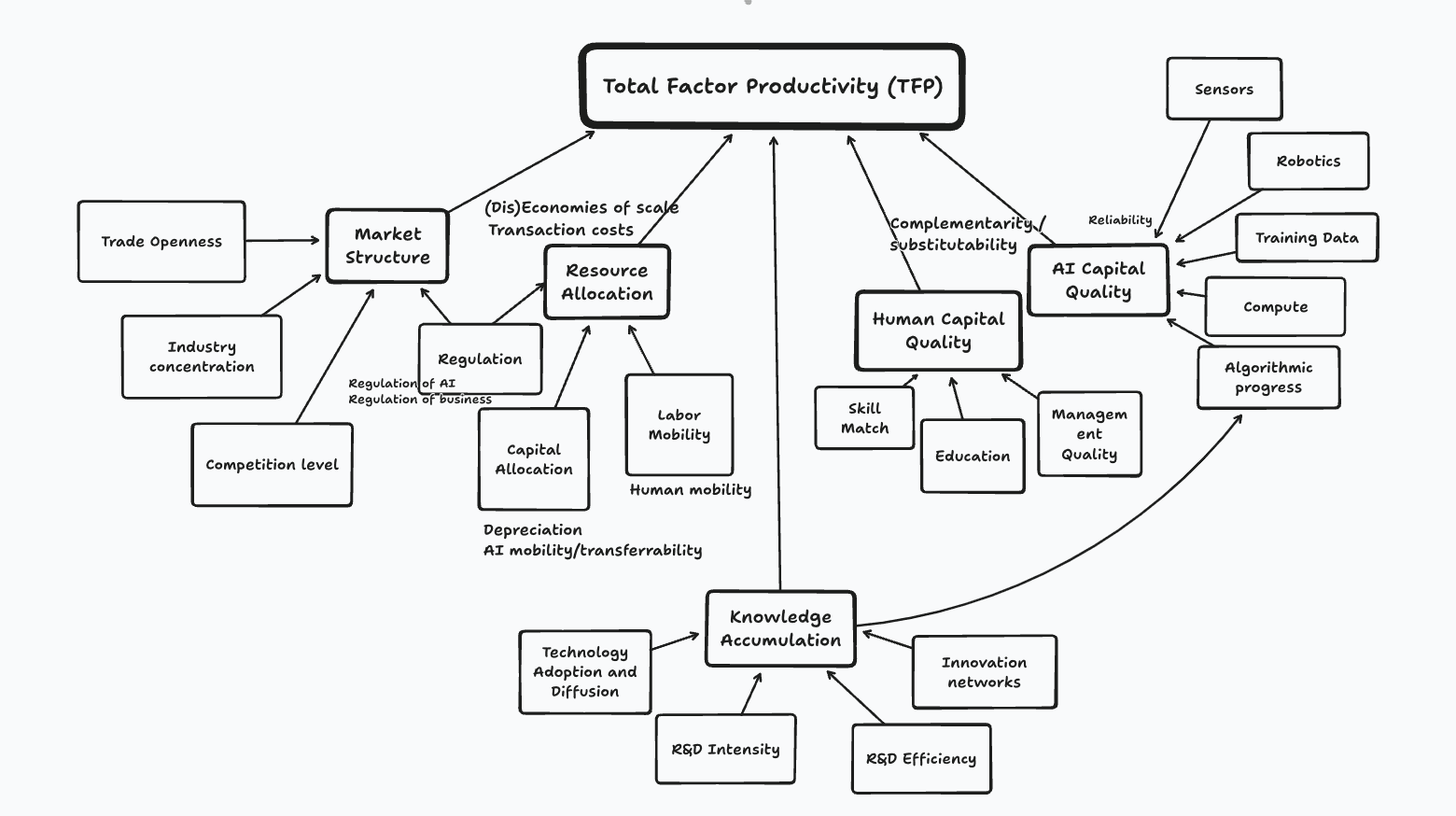

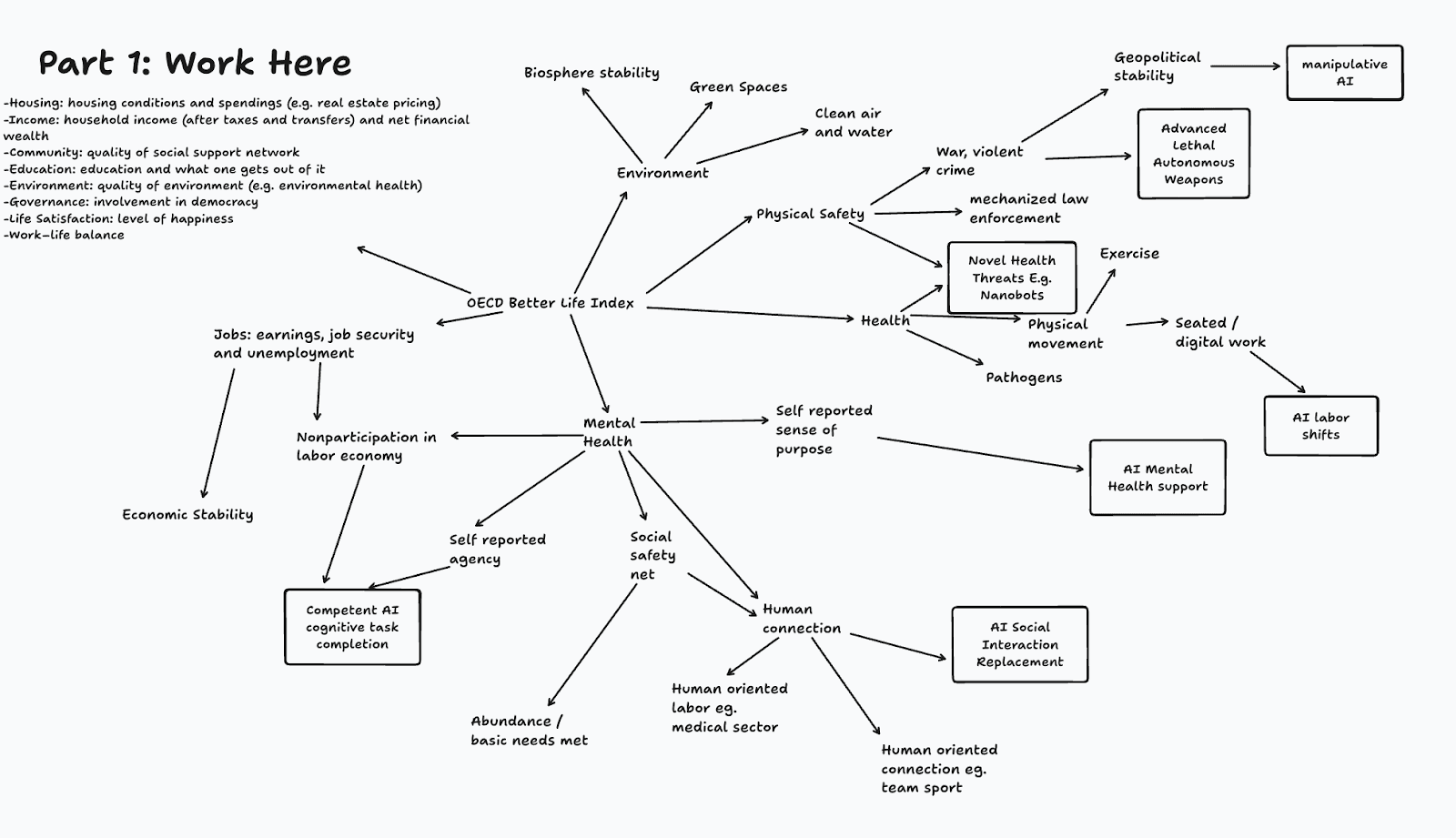

Economic Causal Modeling: Attendees created economic diagrams describing variables (e.g. labor automation, productivity) and observables that impact top-level measures of economic health (e.g. growth, inequality, quality of life).

Forecasting: Attendees applied Tetlockian forecasting techniques to key economic questions and generated useful forecasting questions to track.

Based on more than 150 pages of notes from this conference, Convergence Analysis will produce a public report summarizing key takeaways from these sessions, including:

Common themes and disagreements among experts for economic outcomes in rapid AI timelines

Explainers of the economic models generated by attendees

Forecasts and novel forecasting questions to track AI economic impacts

To get updates on our upcoming report, leave us your email in the signup form at the top of this page!

Motivation

We believe conferences like this one are critical for field-building in the domain of economic impacts of AI, for the following reasons:

There is a lack of concrete research on the economic impacts of specific scenarios involving rapid AI timelines. There are no compelling models that explain in detail what economic futures look like if no significant governance interventions occur.

In part because of the lack of concrete scenarios, there isn’t sufficient public concern or awareness regarding the urgency of evaluating economic outcomes driven by AI advancements. Most proposals to improve economic futures are still well outside the Overton window.

In particular, we believe that scenario modeling is a compelling and underutilized tool to produce better predictions on uncertain futures:

By focusing on individual scenarios, participants are better able to reason from the same set of assumptions rather than their priors (which can vary widely).

It can reduce uncertainty and provide clear guidelines that make reasoning easier.

It allows us to compare and contrast outcomes across scenarios, helping us understand how specific parameters affect society and the economy.

By applying scenario modeling during this conference, we’re developing a much clearer understanding of the perspective of leading economists in the case of rapid AI timelines. We believe conferences such as ours will lead to the creation of new research agendas and projects tackling outstanding uncertainties in this domain.

Attendees

We had 30 participants in total: 20 attendees and 10 organizers from Convergence and Metaculus. Attendees included representatives from major AI labs, universities, and AI safety non-profits, from organizations including Google, the UN, Partnership on AI, DeepMind, CARMA, OECD, SGH Warsaw School of Economics, ICFG, Stanford, AOI, MIT, FHI, OpenPhil, and OpenAI.

Host Organizations

Convergence Analysis is a non-profit conducting strategic research to mitigate the existential risk posed by AI technologies. We are a leading organization in AI scenario modeling: research clarifying and evaluating possible and important AI scenarios. We also founded and run the AI Scenarios Network, the first cross-organizational network coordinating scenario researchers for AI outcomes.

Metaculus is an online forecasting platform and aggregation engine working to improve human reasoning and coordination on topics of global importance. It offers trustworthy forecasting and modeling infrastructure for forecasters, decision makers, and the public.

The Future of Life Institute focuses on reducing risks from emerging technologies, particularly Artificial Intelligence. Their work consists of grantmaking, educational outreach, and advocating for better policy making in the United Nations, U.S. government and European Union institutions.

Day-By-Day Summary

Day 1: Worldbuilding

At the start of Day 1, we introduced ourselves, provided some context around using scenario modeling as a technique to evaluate future outcomes, and shared a short presentation on why rapid timelines to AI are possible.

Next, we asked participants to consider three categories of scenarios, differentiated by the magnitude of AI capabilities in 2030. We gave them ~30 minutes to read and discuss these scenarios in tables of 4-5 people.

The scenarios were roughly as follows:

Scenario 1: Current AI systems, but with improved capabilities in 2030

Scenario 2: Powerful, narrow AI systems that outperform humans on 95% of well-scoped tasks

Scenario 3: Powerful, general AI systems that outperform humans on all forms of cognitive labor

Next, we moved into the worldbuilding section of the conference. Attendees were given 45 minutes to independently write "general" worldbuilding (i.e. not focused on their domain of expertise) for each of the 3 scenarios, and then roughly 45 minutes to discuss it within their tables of 4-5 people. At the end of the session, a facilitator from each table presented a summary of that table's discussion, including commonalities and cruxes.

After lunch and a forecasting session (discussed below), attendees repeated the worldbuilding exercise, this time doing a deep dive into their own areas of expertise. We mixed them into new groups so they could meet more people. They had more time for discussion and presentations, and this work took up much of the rest of the day.

These sessions alone produced ~100 pages of writing, which we are now reading and consolidating into conclusions. Some common themes include:

Significant unemployment stemming from AI automation

Increases in wealth and income inequality

Changes to the cost of goods and services

Impacts on the productivity / growth rate of developed countries

Increases in power concentration

Day 2: Economic Causal Models

On the second day, we introduced attendees to the concepts behind economic causal models, which are a lightweight way for attendees to model the cruxes and key variables / metrics involved in their economic worldviews. We had them self-sort into groups based on their top-level metric of interest, resulting in 2 groups on Growth (e.g. GDP), 2 groups on Inequality (1 inter-country, 1 intra-country), and 1 group on Quality of Life.

In their groups, they brainstormed intermediate variables that affected these top-level metrics and created a high-level map of their economic worldview. Over the course of the day, we had them draw progressively more complex causal models incorporating more features. For example, we had them:

Incorporate the idea of “AI observables”, or real-world measurables that might serve as early indicators for changes in variables

Break down variables (e.g. labor automation) into their sub-components, such as sectors of the economy, regions (developed vs. developing countries), or skill level (high vs. low).

Create predictions for Scenario 3 using their model, identifying possible quantitative changes that propagated through their diagram.

Here are some examples of the types of diagrams our attendees created:

During the creation of these diagrams, attendees participated in table discussions about the most important cruxes, or variables, that will eventually impact the top-level variables (growth, inequality, quality of life) that they care about. After the sessions, we had them answer a series of questions explaining their reasoning.

We’ll be publishing the economic diagrams generated by our attendees, as well as text summaries of their models in a report scheduled to be released in Q1 2025.

Forecasting Sessions

During roughly three 1-hour sessions led by Metaculus over the course of the conference, we had attendees participate in a variety of forecasting exercises to generate interesting results and challenge their reasoning.

On Day 1, participants were given a brief introduction to forecasting, and asked to spend ~30 minutes creating detailed forecasts on a variety of economic questions. Metaculus will shortly publish these results on a public page.

On Day 2, Metaculus hosted a series of mock debates on key economic concepts. They divided the room into 4 groups, where each group took a single position on one of the following questions:

Will unemployment in 2030 go up or down?

Will the labor share of GDP in 2030 go up or down?

Each group discussed for 10 minutes and elected 1-2 expert representatives, who then participated in a mock debate (including rebuttals) arguing why their group's position on its question would hold true.

Finally, we also had them generate interesting forecasting questions on both Day 1 and Day 2, based on the discussions and writing that they conducted on each day. We’ll be publishing these forecasting questions in our report in Q1.

Attendee Feedback

We collected feedback at the end of the conference via a Google Form. We also noted verbal feedback throughout the conference and held a short feedback session at the end of Day 1.

Overall opinion and value of the conference: Most attendees found the conference valuable both personally and for the ecosystem, highlighting the opportunity to refine their thinking about AI's economic impacts. Many appreciated the combination of structured activities and informal discussions, noting that the conference helped them better understand the economic effects of AI and make valuable connections.

Overall satisfaction rating: Satisfaction ratings predominantly ranged from 7 to 10 out of 10, with most scores clustering around 8-9. One notable outlier gave a score of 3.

Set of attendees/composition: The attendee composition received generally positive feedback, with participants praising the diversity of backgrounds and expertise. However, a consistent theme emerged regarding the need for more economists. Attendees appreciated the group's willingness to engage in evidence-based discussions and make space for all perspectives.

Recommended improvements: Improvement suggestions centered around several key themes: structuring the exercises more effectively (particularly the Day 2 modeling sessions), providing clearer explanations of exercise purposes, focusing on fewer topics in greater depth, and ensuring balanced expertise across discussion groups.

Other specific feedback/thoughts: Attendees praised the smooth organization, venue quality, and responsiveness of the organizers. Some suggested extending the timeline to 2035 to account for economic lag effects, while others recommended more structured debate formats and better data incorporation.

In Summary

We were quite pleased with the overall conference experience, both for ourselves and our attendees! We had no real mishaps, and attendees seemed to genuinely enjoy the focus and methodology of the conference. We've ended up with ~150 pages of writing from attendees to work through, many economic causal diagrams, and ~20 pages of forecasts & forecasting questions.

Having started to work through the results, we're confident the report will contain many interesting takeaways that help kick off new research and projects in this domain.

To get updates on our upcoming report from this conference in Q1, leave us your email in the signup form at the top of this page!
